参考文献:https://github.com/xiantang/Spider/blob/master/Anti_Anti_Spider_521/pass_521.py

写在前面的话

Python在爬虫方面的优势,想必业界无人不知,随着互联网信息时代的的发展,Python爬虫日益突出的地位越来越明显,爬虫与反爬虫愈演愈烈。下面分析一例关于返回HTTP状态码为521的案例。

案例准备

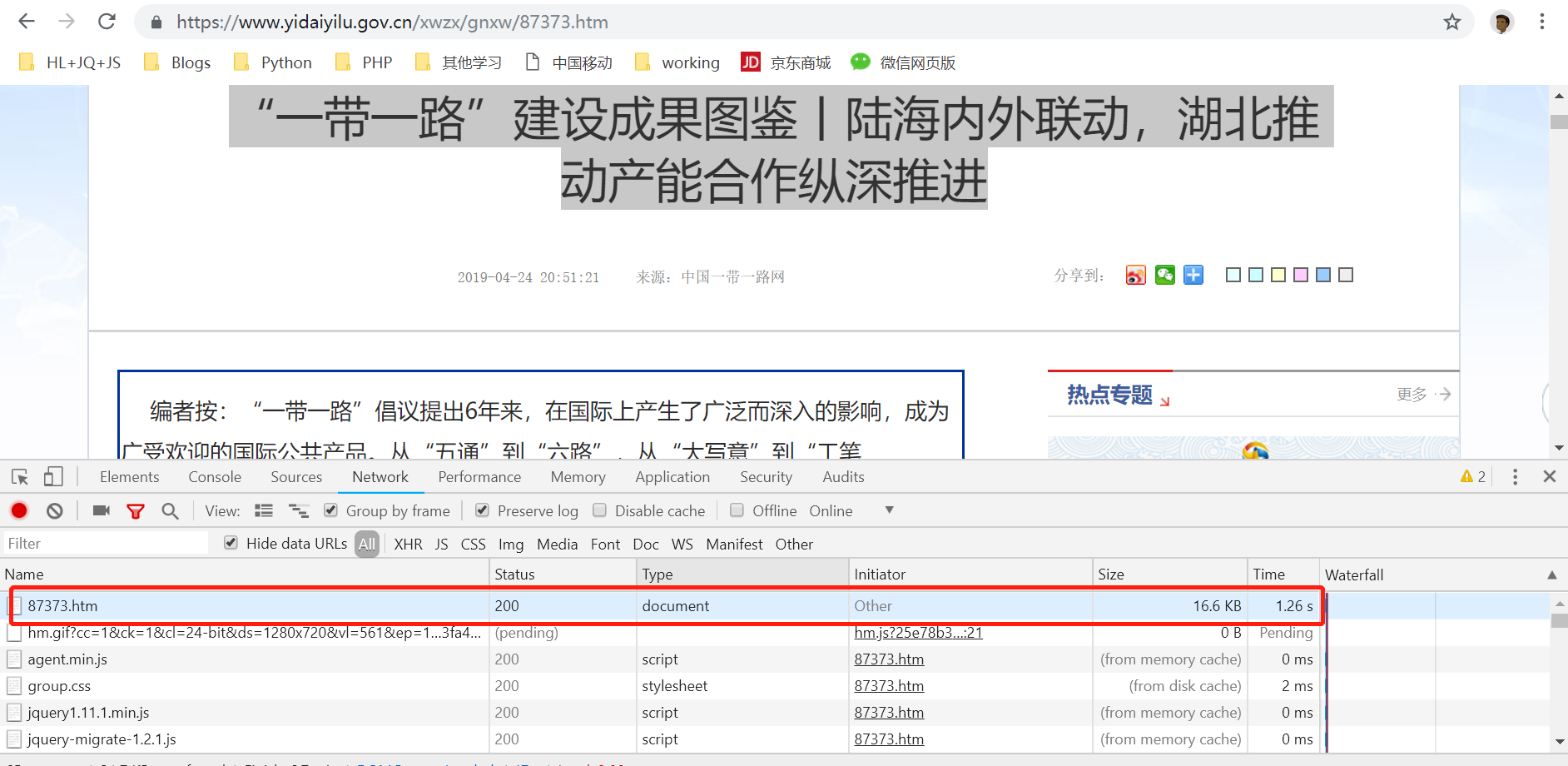

- 案例网站:【中国一带一路官网】, 以抓取文章【“一带一路”建设成果图鉴丨陆海内外联动,湖北推动产能合作纵深推进】为例,进行深度剖析。

案例剖析

1) 浏览器访问【“一带一路”建设成果图鉴丨陆海内外联动,湖北推动产能合作纵深推进】:

2)写ython代码访问,查看http(s)返回状态

1 | # coding:utf-8 |

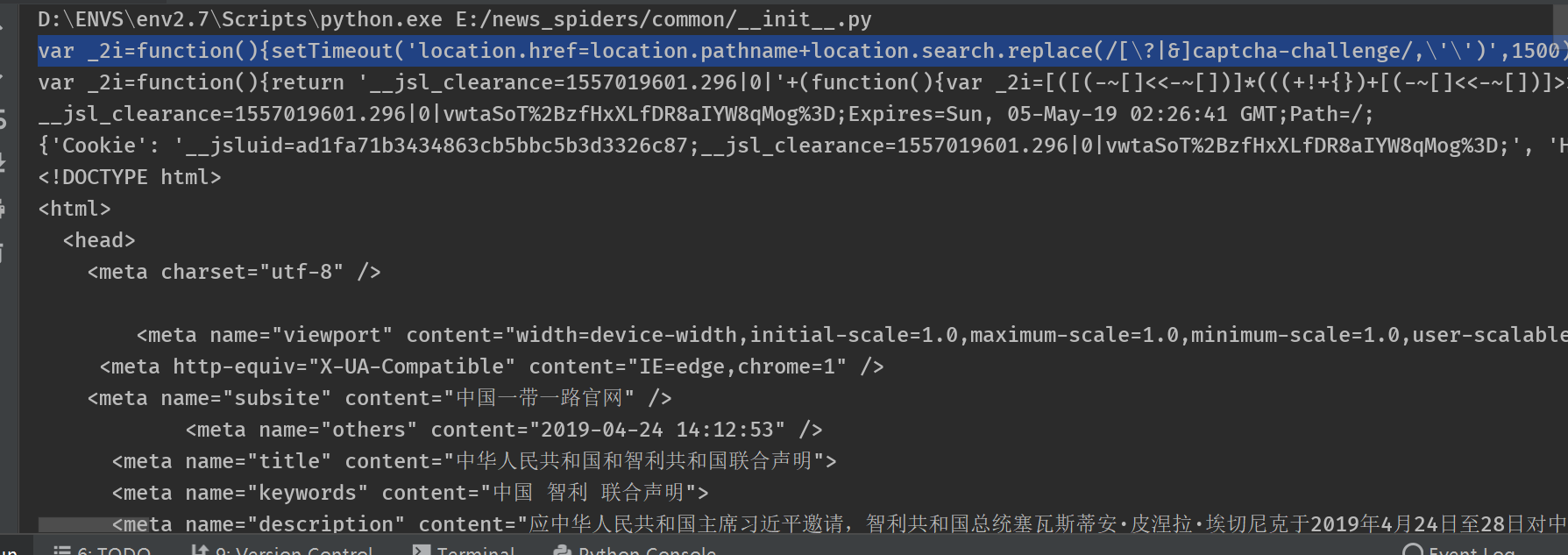

不幸的是,返回的http的状态码却是501,text为一段混淆的js代码。

3)百度查资料,推荐为文首的【参考文献】

继续参照资料修改代码,Python执行JS首选execjs,pip安装如下:1

pip install PyExecJS

将请求到的js执行:

1 | text_521 = ''.join(re.findall('<script>(.*?)</script>', resp.text)) |

将返回的结果print发现还是一段JS,标准格式化(【格式化Javascript工具】),结果如下所示:

1 | var _2i = function () { |

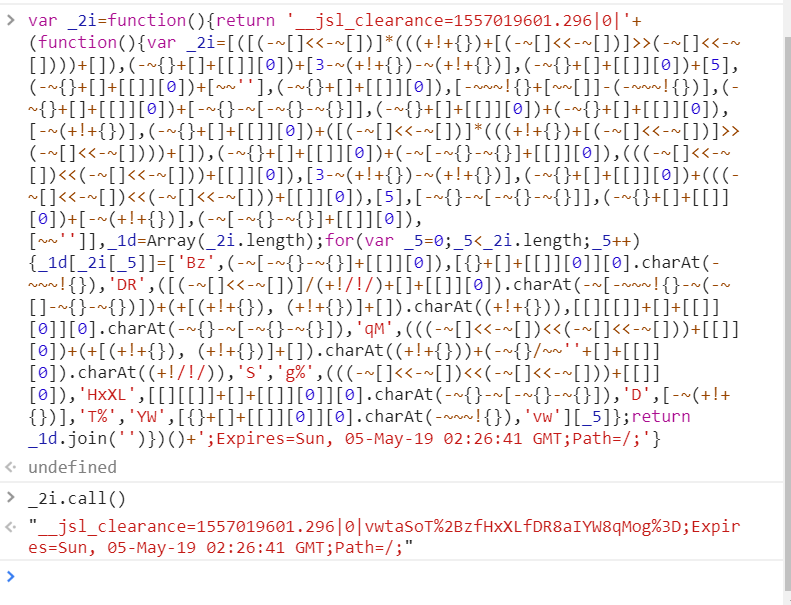

4)修改与浏览器相关的代码,然后放入浏览器的console进行调试。

注意,在调试过程中,不难发现,js变量是动态生成的。最初还嵌套有document.createElement('div'),Python的execjs包不支持处理这类代码,需要做相应处理。

5)综上分析,完整代码如下:

1 | #!/usr/bin/env python |

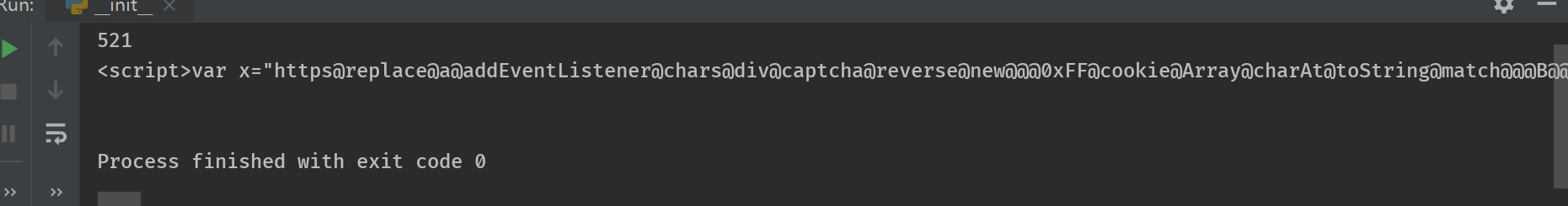

运行结果如下: